From Hidden to Hero: Low-Level Technical Metadata Catalogs’ Relevance for the Future of Lakehouses

Evolution of Metadata Catalogs in Lakehouses

In the traditional world of data warehousing, metadata catalogs quietly operated behind the scenes. They were simple data dictionaries inside a monolithic system that tightly integrated storage, compute, and query optimization. With only one engine to serve and a relatively static environment, these catalogs could remain low-profile, storing basic schemas and indexes, while the data warehouse engine did the heavy lifting.

Fast forward to the lakehouse era, where open storage formats, decoupled compute, and multiple engines share the same underlying data. Here, the low-level metadata catalog has stepped into the spotlight, moving from a hidden internal component to a critical infrastructure service. To understand this shift, let’s first define the lakehouse: a data management paradigm that combines the best features of data warehouses and data lakes, providing a unified platform for storing, processing, and analyzing structured, semi-structured, and unstructured data.

The separation of storage and compute, combined with the emergence of ACID-capable open table formats like Apache Iceberg, Delta Lake, and Apache Hudi, means the catalog now manages detailed snapshots, file-level statistics, and transactional commits. Because multiple query engines (Spark, Trino, Flink, and others) rely on this catalog, it has become indispensable for performance optimization, consistent schema evolution, and interoperable time-travel queries.

In this post, we explore how the low-level metadata catalog was once abstracted inside the warehouse, how it has become the linchpin of the lakehouse, and why its future role promises even more value. We also distinguish it from business catalogs, which focus on human-friendly governance and discovery. Finally, we discuss promising ways to expand the metadata this catalog serves, so that query engines can optimize their table scans out of the box.

The Warehouse Era: A Simpler Catalog

In this era, data warehouses served as the central repository for all data operations. Storage, compute, and metadata management were merged into a single, tightly integrated environment. As a result, there was little need for an external cataloging layer, and the engine itself maintained a straightforward registry of tables and schemas. Simplicity reigned for both operations and governance, with any separate “business catalog” focusing purely on higher-level elements such as definitions, classifications, and lineage.

Single Engine, Integrated Stack: Storage, compute, and metadata management were all part of one tightly coupled system. The internal metadata catalog was deeply embedded and fundamentally served as a data dictionary, storing table schemas, column definitions, and perhaps some indexing information.

Minimal Complexity, Minimal Exposure: The data warehouse engine handled transactions and schema changes internally. Since no other engine needed direct access to this metadata, it could stay hidden and relatively simple.

Business Catalog as a Governance Layer: If a separate business-oriented catalog existed, it focused on higher-level attributes: business glossaries, compliance tags, and data lineage. It didn’t need to handle file-level details or time-travel states because the warehouse engine handled such complexities internally.

Result

Little depended on the low-level metadata catalog for performance, scaling, or readiness for concurrent multi-engine queries. Its role was modest and easily overlooked; it was not a first-class citizen.

The Lakehouse Paradigm: Decoupled Storage and Compute

Modern architectures are moving toward lakehouses, which merge the cost-efficiency and openness of data lakes with the reliability and performance features of data warehouses. The storage layer (often cloud object storage) is separated from compute engines, and multiple analytical frameworks can operate directly on shared datasets. Let’s look at how this changed the narrative in the data world.

Key Shifts in the Lakehouse

- Open Table Formats as the Foundation:

Technologies like Apache Iceberg, Delta Lake, and Apache Hudi bring ACID compliance to file-based storage. These table formats are no longer hidden away in a proprietary engine. Instead, the low-level metadata (snapshots, transaction logs, and file manifests) becomes the universal language of the lakehouse architecture.

- Multiple Engines, One Source of Truth:

Different query engines each bring unique strengths. Spark might handle batch processing, Trino could offer SQL-based interactive queries, and Flink might manage streaming workloads. All these engines rely on the same underlying datasets, so they must share a consistent “source of truth” for metadata, and the low-level catalog provides precisely that.

- Externalized, Engine-Agnostic Metadata Layer:

Instead of being buried inside one engine, the low-level metadata catalog is an independent service. It is now a critical piece of infrastructure that all engines consult for table versions, schema information, file-level statistics, and commit details.
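To make the “one source of truth” idea concrete, here is a minimal sketch of the kind of metadata an external catalog holds: a table points at its current snapshot, which lists data files and their column statistics. The class names (`DataFile`, `Snapshot`, `Catalog`), the `load_table` method, and the `s3://bucket/...` path are hypothetical illustrations, not the API of any specific catalog or table format.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple


@dataclass(frozen=True)
class DataFile:
    path: str
    record_count: int
    # Per-column (min, max) bounds, similar in spirit to what table formats keep in manifests.
    column_bounds: Dict[str, Tuple[int, int]]


@dataclass(frozen=True)
class Snapshot:
    snapshot_id: int
    schema: Tuple[str, ...]
    files: Tuple[DataFile, ...]


@dataclass
class Catalog:
    """Hypothetical engine-agnostic catalog: table name -> current snapshot."""
    tables: Dict[str, Snapshot] = field(default_factory=dict)

    def load_table(self, name: str) -> Snapshot:
        return self.tables[name]


catalog = Catalog()
catalog.tables["sales"] = Snapshot(
    snapshot_id=1,
    schema=("order_id", "amount"),
    files=(DataFile("s3://bucket/sales/f1.parquet", 1000, {"amount": (5, 900)}),),
)

# Two different engines resolve the same table and see the same snapshot object.
spark_view = catalog.load_table("sales")
trino_view = catalog.load_table("sales")
print(spark_view is trino_view)
```

Because every engine asks the same service for the current snapshot, they all plan against identical files and statistics instead of each keeping a private copy of the metadata.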

Business Catalogs Vs. Technical Metadata Catalogs

To manage and govern data effectively, two types of catalogs often take center stage. Business catalogs speak the language of governance, glossaries, and compliance, while low-level metadata catalogs hold the technical keys to performance and optimization. Let’s look more closely at how they differ.

Business Catalog

- Focus: Human-friendly metadata (business terms, lineage diagrams, stewardship roles, compliance tags)

- Audience: Data analysts, stewards, and governance teams

- Complexity: Provides high-level context but doesn’t store detailed file references or low-level snapshot states

Low-Level Metadata Catalog

- Focus: Detailed technical metadata (snapshots, manifests, partition-to-file mappings, column-level statistics)

- Audience: Query engines and systems that need fine-grained data insights for optimization and consistency

- Complexity: Must scale to billions of files, handle frequent commits, and serve partition stats in milliseconds

Why Low-Level Metadata Catalogs are Becoming Data-Intensive

While business catalogs focus on data governance and human-friendly metadata, low-level metadata catalogs have evolved into performance-critical services. They manage massive file counts, snapshot histories, and partition-level statistics, and must serve them quickly. With petabytes of data spread across millions of Parquet files, the low-level metadata catalog must answer detailed questions at scale:

High Write Throughput

Constant ingestion of new data creates snapshots every few minutes or even seconds. Each commit must be recorded atomically, so that readers always see a consistent version of the table.
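A common way to record frequent commits is an append-only chain of immutable snapshots plus a single “current” pointer. The sketch below shows that shape in one process; `TableLog`, `commit_append`, and the file names are hypothetical, and a real catalog would perform the pointer swap in a transactional store rather than with a Python assignment.

```python
import itertools
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class Snapshot:
    snapshot_id: int
    parent_id: Optional[int]  # previous snapshot, forming a history chain
    files: Tuple[str, ...]    # full list of live data files in this version


class TableLog:
    """Hypothetical per-table snapshot log with a single 'current' pointer."""

    def __init__(self) -> None:
        self._ids = itertools.count(1)
        self.snapshots: Dict[int, Snapshot] = {}
        self.current: Optional[Snapshot] = None

    def commit_append(self, new_files) -> Snapshot:
        base = self.current
        files = (base.files if base else ()) + tuple(new_files)
        snap = Snapshot(next(self._ids), base.snapshot_id if base else None, files)
        # Build the new immutable snapshot first, then publish it with one
        # reference swap; readers see either the old or the new version.
        self.snapshots[snap.snapshot_id] = snap
        self.current = snap
        return snap


log = TableLog()
log.commit_append(["f1.parquet"])  # e.g. one micro-batch
s2 = log.commit_append(["f2.parquet", "f3.parquet"])
print(s2.snapshot_id, s2.parent_id, s2.files)
```

Keeping old snapshots immutable is also what later makes time travel and incremental reads cheap: earlier versions are still addressable by id.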

Complex Transaction Coordination

Multiple writers and readers operate concurrently, necessitating a robust transactional layer that prevents conflicts and ensures atomic visibility of new data. This is where ACID compliance plays a crucial role in maintaining data integrity.
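One widely used coordination pattern is optimistic concurrency: each writer reads the current version, prepares its change, and commits only if the version is unchanged, retrying otherwise. This is a minimal sketch under that assumption; the `Catalog` class, its `compare_and_swap` method, and `append_with_retry` are hypothetical, and the lock merely stands in for the atomic conditional update a real catalog backend would provide.

```python
import threading
from typing import Tuple


class Catalog:
    """Hypothetical catalog exposing compare-and-swap on a table's version."""

    def __init__(self) -> None:
        self._lock = threading.Lock()  # stands in for an atomic conditional update
        self.version = 0
        self.files: Tuple[str, ...] = ()

    def read(self):
        with self._lock:
            return self.version, self.files

    def compare_and_swap(self, expected_version, new_files) -> bool:
        """Commit only if nobody else committed since expected_version was read."""
        with self._lock:
            if self.version != expected_version:
                return False  # conflict: caller must re-read and retry
            self.version += 1
            self.files = new_files
            return True


def append_with_retry(catalog, new_file, max_attempts=100) -> bool:
    for _ in range(max_attempts):
        version, files = catalog.read()   # read the current table state
        proposed = files + (new_file,)    # prepare the change off to the side
        if catalog.compare_and_swap(version, proposed):
            return True
    return False


catalog = Catalog()
writers = [
    threading.Thread(target=append_with_retry, args=(catalog, f"part-{i}.parquet"))
    for i in range(8)
]
for w in writers:
    w.start()
for w in writers:
    w.join()
print(catalog.version, len(catalog.files))  # 8 8: every append lands exactly once
```

A conflicting writer never overwrites another writer’s commit; it simply rebases on the newer version and tries again, so no appended file is lost.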

Large Table Counts (10k–1000k)

Many modern data platforms host tens or hundreds of thousands of tables in a single environment. The metadata catalog must simultaneously scale to track table definitions, schemas, and operational details for hundreds of thousands of tables.

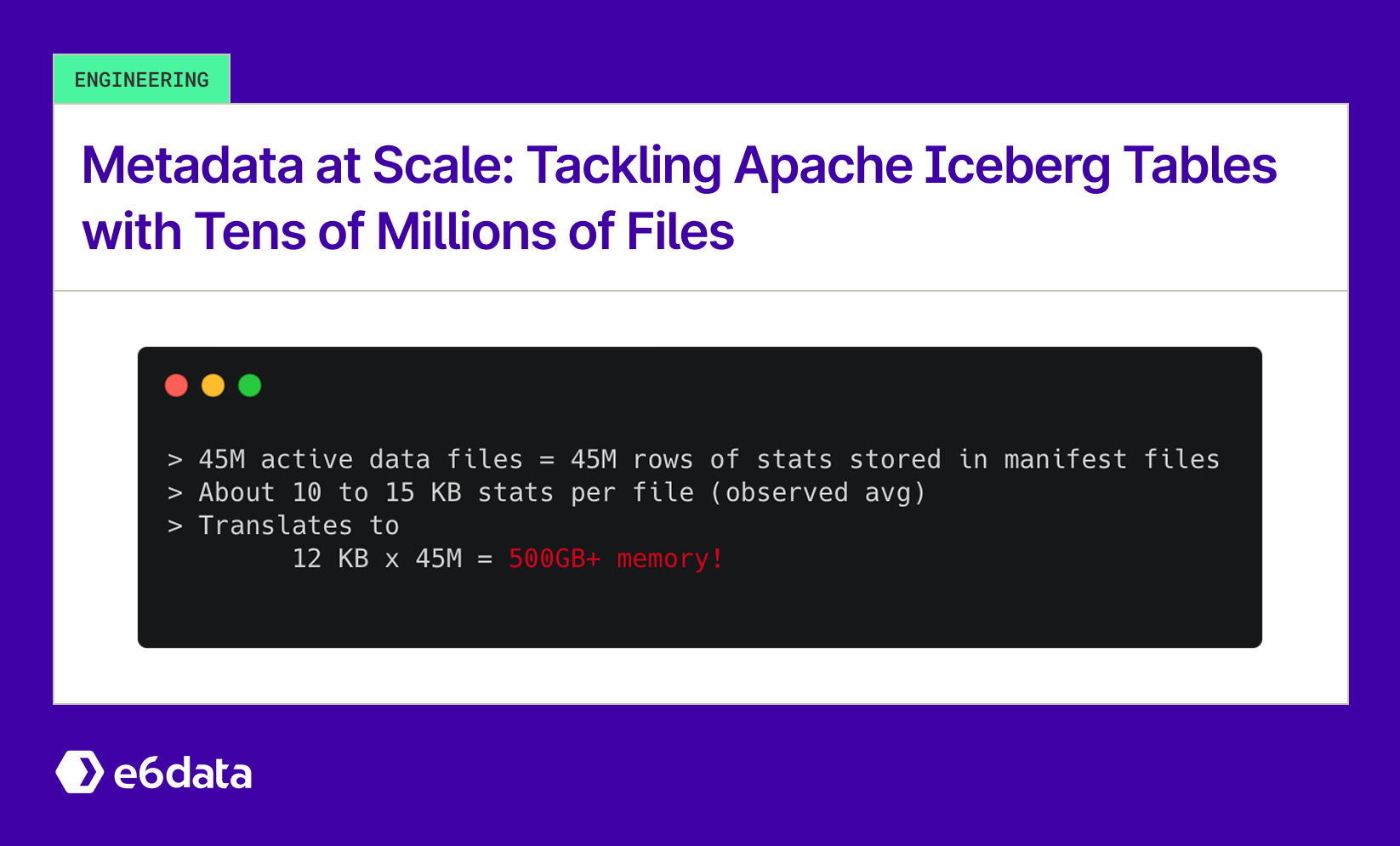

Huge Number of Files/Partitions per Table

Each table can have thousands or millions of Parquet files and partitions, especially in streaming or micro-batch ingestion scenarios. Managing partition boundaries, file paths, and associated statistics at such a scale is a significant challenge for the catalog.

Fine-Grained Partition and File-Level Stats

Query engines rely on partition pruning and file skipping to accelerate queries. Storing and querying these statistics at scale turns the catalog into a data-intensive platform, often requiring indexing and caching strategies. This level of detail is essential for efficient data discovery and optimized query performance.
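File skipping with min/max statistics can be illustrated in a few lines: a file is read only if its recorded value range can overlap the query predicate. The `FILE_STATS` layout and `files_to_scan` function below are hypothetical; real table formats store these bounds in manifests or Parquet footers.

```python
from typing import Dict, List, Tuple

# Hypothetical per-file statistics as a catalog might serve them:
# file path -> {column: (min, max)}
FILE_STATS: Dict[str, Dict[str, Tuple[int, int]]] = {
    "f1.parquet": {"amount": (0, 99)},
    "f2.parquet": {"amount": (100, 499)},
    "f3.parquet": {"amount": (500, 999)},
}


def files_to_scan(stats, column, lo, hi) -> List[str]:
    """Keep only files whose [min, max] range can overlap lo <= column <= hi."""
    selected = []
    for path, bounds in stats.items():
        col_min, col_max = bounds[column]
        if col_max >= lo and col_min <= hi:
            selected.append(path)
    return selected


# WHERE amount BETWEEN 150 AND 300: only f2 can contain matching rows.
print(files_to_scan(FILE_STATS, "amount", 150, 300))  # ['f2.parquet']
```

The check is conservative: a selected file may still contain no matching rows, but a skipped file is guaranteed not to.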

As a result, the low-level catalog must be architected like a distributed metadata service. It may use scalable storage backends, caching tiers, and clever indexing structures to handle immense concurrency and volume.
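As one example of a caching tier, a least-recently-used (LRU) cache can sit in front of a slower metadata backend so that hot manifests are served from memory. `ManifestCache` and the lambda used as a backend are hypothetical stand-ins for reads from object storage or a database.

```python
from collections import OrderedDict


class ManifestCache:
    """Hypothetical LRU caching tier in front of a slower metadata backend."""

    def __init__(self, backend_fetch, capacity=2):
        self._fetch = backend_fetch  # e.g. a read from object storage or a database
        self._capacity = capacity
        self._entries = OrderedDict()
        self.backend_reads = 0

    def get(self, key):
        if key in self._entries:
            self._entries.move_to_end(key)  # mark as most recently used
            return self._entries[key]
        self.backend_reads += 1
        value = self._fetch(key)
        self._entries[key] = value
        if len(self._entries) > self._capacity:
            self._entries.popitem(last=False)  # evict the least recently used entry
        return value


cache = ManifestCache(lambda key: f"contents of {key}", capacity=2)
for key in ["m1", "m2", "m1", "m1", "m3", "m2"]:
    cache.get(key)
print(cache.backend_reads)  # 4
```

Six lookups cost only four backend reads here; with real workloads that repeatedly touch the same recent snapshots, the savings grow with the hit rate.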

Query Engines as First-Class Consumers

In the lakehouse, query engines are no longer passive readers of simplistic metadata. They are active, first-class consumers of a rich metadata ecosystem. By accessing fine-grained stats and snapshots, engines can:

Perform Fine-Grained Pruning

Instead of scanning an entire dataset, engines skip irrelevant files and partitions, cutting computation and cost. This is particularly beneficial when dealing with semi-structured and unstructured data in the lakehouse.
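The cost effect of partition pruning is easy to quantify: the engine reads only files whose partition value matches the filter. The `FileEntry` records, the `event_day` partition column, and the byte sizes below are made-up values for illustration.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class FileEntry:
    path: str
    event_day: date  # partition value recorded in the catalog's metadata
    size_bytes: int


FILES = [
    FileEntry("d1-a.parquet", date(2024, 1, 1), 400),
    FileEntry("d1-b.parquet", date(2024, 1, 1), 600),
    FileEntry("d2-a.parquet", date(2024, 1, 2), 500),
    FileEntry("d3-a.parquet", date(2024, 1, 3), 500),
]


def plan_scan(files, day):
    """Partition pruning: keep only files whose partition value matches the filter."""
    return [f for f in files if f.event_day == day]


selected = plan_scan(FILES, date(2024, 1, 2))
scanned = sum(f.size_bytes for f in selected)
total = sum(f.size_bytes for f in FILES)
print(f"scan {scanned} of {total} bytes")  # scan 500 of 2000 bytes
```

The decision is made entirely from catalog metadata, before any data file is opened.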

Adapt to Schema Evolution Seamlessly

Engines consult the catalog’s record of schema changes. They can handle new columns gracefully and query older snapshots without manual intervention.
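Schema evolution becomes manageable when columns are tracked by a stable ID rather than by name; Apache Iceberg, for example, assigns each column a field ID. The sketch below assumes that approach: a file written under an older schema is read through the current schema, so a renamed column still resolves and a newly added column comes back as `None`. The schemas, rows, and `read_with_schema` function are hypothetical.

```python
from typing import Dict, List

# Schema history keyed by field ID (hypothetical example):
#   v1: {1: "id", 2: "name"}
#   v2: field 2 renamed to "full_name", new field 3 "email" added.
SCHEMA_V2: Dict[int, str] = {1: "id", 2: "full_name", 3: "email"}

# Rows from a data file written under v1: values are stored by field ID, not by name.
OLD_FILE_ROWS: List[Dict[int, object]] = [{1: 10, 2: "Ada"}, {1: 11, 2: "Lin"}]


def read_with_schema(rows, schema):
    """Project rows onto the current schema; fields absent from the file become None."""
    return [{name: row.get(field_id) for field_id, name in schema.items()} for row in rows]


result = read_with_schema(OLD_FILE_ROWS, SCHEMA_V2)
print(result)
```

No data file is rewritten: the rename and the new column are purely metadata changes that the engine applies at read time.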

Enable Time-Travel and Incremental Queries

Engines can run queries against historical snapshots or incremental changes, enabling reproducibility, debugging, and compliance checks. This capability is crucial for data lineage tracking and maintaining data security.
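Time travel typically reduces to a lookup in the snapshot history: find the latest snapshot committed at or before the requested time. A minimal sketch with a hypothetical `HISTORY` list sorted by commit timestamp and a binary search:

```python
import bisect
from typing import List, Tuple

# Hypothetical snapshot history: (commit timestamp in epoch ms, snapshot id), sorted by time.
HISTORY: List[Tuple[int, int]] = [(1_000, 101), (2_000, 102), (3_500, 103)]


def snapshot_as_of(history, ts_ms):
    """Return the id of the latest snapshot committed at or before ts_ms."""
    timestamps = [t for t, _ in history]
    idx = bisect.bisect_right(timestamps, ts_ms) - 1
    if idx < 0:
        raise ValueError("no snapshot exists at or before that time")
    return history[idx][1]


print(snapshot_as_of(HISTORY, 2_999))  # 102
print(snapshot_as_of(HISTORY, 3_500))  # 103
```

Once the snapshot id is known, the engine plans the query against that snapshot’s file list exactly as it would for the current version.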

This engine-centric reliance elevates the catalog from a footnote to a main character in the data pipeline narrative, making it an essential component of lakehouse analytics.

The Future: Enriching the Catalog for Greater Performance

What if catalogs could do more than just keep track of files and partitions? As we embrace the lakehouse paradigm, the metadata layer itself could become a strategic asset—actively shaping query plans, speeding up incremental data reads, and even learning from usage patterns to refine performance over time. By moving beyond passive bookkeeping, the catalog can evolve into a powerful engine of optimization, anticipating and adapting to the ever-growing scale and complexity of modern data workloads.

Incremental Snapshots

Beyond full snapshots, catalogs could store “incremental” metadata that tracks changes since a given snapshot. Engines can use this to quickly identify newly added files or dropped partitions, making incremental queries more efficient.
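Incremental metadata is essentially the difference between two snapshots: which files were added and which were removed. The sketch below computes that diff naively from full file lists to show what such metadata would contain; a catalog that stores incremental metadata could serve the result directly. `SNAPSHOT_FILES` and `changes_between` are hypothetical.

```python
# Hypothetical: each snapshot id maps to the set of live data files.
SNAPSHOT_FILES = {
    7: {"f1.parquet", "f2.parquet"},
    8: {"f1.parquet", "f2.parquet", "f3.parquet"},
    9: {"f1.parquet", "f3.parquet", "f4.parquet"},  # f2 removed, e.g. by compaction or delete
}


def changes_between(snapshots, from_id, to_id):
    """Return (added, removed) data files between two snapshots."""
    before, after = snapshots[from_id], snapshots[to_id]
    return sorted(after - before), sorted(before - after)


added, removed = changes_between(SNAPSHOT_FILES, 7, 9)
print(added, removed)  # ['f3.parquet', 'f4.parquet'] ['f2.parquet']
```

An incremental query then reads only the added files instead of rescanning the whole table.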

Partition-to-Datafile Mapping

Detailed indexing structures could map each partition to its data files in a queryable format, letting engines pinpoint relevant chunks rapidly. This could be exposed as a specialized API, enabling engines to fetch partition-specific metadata in a single call.
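A partition-to-datafile index can be built once from flat manifest entries and then answer “everything about this partition” in a single call. `FileEntry`, `PartitionIndex`, and `partition_metadata` are hypothetical names for illustration, not an existing catalog API.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple


class FileEntry(NamedTuple):
    path: str
    partition: str  # e.g. "region=eu"
    record_count: int


class PartitionIndex:
    """Hypothetical partition -> data file index built from flat manifest entries."""

    def __init__(self, entries: List[FileEntry]) -> None:
        self._by_partition: Dict[str, List[FileEntry]] = defaultdict(list)
        for entry in entries:
            self._by_partition[entry.partition].append(entry)

    def partition_metadata(self, partition: str) -> dict:
        """One call returns the files and an aggregate row count for a partition."""
        files = self._by_partition.get(partition, [])
        return {
            "files": [f.path for f in files],
            "record_count": sum(f.record_count for f in files),
        }


index = PartitionIndex([
    FileEntry("a.parquet", "region=eu", 100),
    FileEntry("b.parquet", "region=us", 250),
    FileEntry("c.parquet", "region=eu", 50),
])
print(index.partition_metadata("region=eu"))
```

Without such an index, an engine would have to read every manifest and filter entries itself to answer the same question.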

Queryable Parquet Statistics

Rather than just storing statistics in binary manifest files, catalogs could serve them in a structured, queryable manner. Engines might directly issue metadata “queries” to the catalog, retrieving min/max values, distinct counts, or bloom filter indicators for a given column. This metadata query capability could further reduce data scanning and substantially improve performance.
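To illustrate what “queryable” statistics could look like, the sketch loads per-file column statistics into a relational table (an in-memory SQLite database from Python’s standard library) and answers metadata questions with SQL. The `column_stats` table layout and its values are hypothetical; they stand in for statistics extracted from Parquet footers or manifests.

```python
import sqlite3

# Hypothetical: column statistics extracted from Parquet footers or manifests,
# loaded into a relational table that engines can query directly.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE column_stats "
    "(file TEXT, col TEXT, min_val INTEGER, max_val INTEGER, null_count INTEGER)"
)
conn.executemany(
    "INSERT INTO column_stats VALUES (?, ?, ?, ?, ?)",
    [
        ("f1.parquet", "amount", 0, 99, 0),
        ("f2.parquet", "amount", 100, 499, 3),
        ("f3.parquet", "amount", 500, 999, 0),
    ],
)

# Metadata queries: which files could hold amount > 450, and what is the table-wide max?
candidates = [
    row[0]
    for row in conn.execute(
        "SELECT file FROM column_stats WHERE col = ? AND max_val > ? ORDER BY file",
        ("amount", 450),
    )
]
(global_max,) = conn.execute(
    "SELECT MAX(max_val) FROM column_stats WHERE col = ?", ("amount",)
).fetchone()
print(candidates, global_max)
```

A query such as `SELECT MAX(amount)` could, in principle, be answered from this table alone without opening any data file.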

Dynamic Metadata Optimization

In the future, catalogs could use machine learning techniques to reorganize or optimize their metadata layouts over time. The catalog could propose better partitioning strategies or caching policies by analyzing usage patterns and query statistics.
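The section envisions machine learning; the sketch below deliberately substitutes a much simpler frequency count to show the basic feedback loop: mine the query log for the columns most often used in filters and suggest one as a partition candidate. `QUERY_FILTERS`, `recommend_partition_column`, and the `min_share` threshold are hypothetical.

```python
from collections import Counter

# Hypothetical query log: the columns each query filtered on.
QUERY_FILTERS = [
    ["event_day"], ["event_day", "region"], ["customer_id"],
    ["event_day"], ["region"], ["event_day", "customer_id"],
]


def recommend_partition_column(query_filters, min_share=0.5):
    """Suggest the most frequently filtered column if it appears in at least min_share of queries."""
    if not query_filters:
        return None
    counts = Counter(col for cols in query_filters for col in set(cols))
    column, hits = counts.most_common(1)[0]
    share = hits / len(query_filters)
    return column if share >= min_share else None


print(recommend_partition_column(QUERY_FILTERS))  # event_day
```

A learned model could weigh many more signals (data volume per query, selectivity, latency), but the input is the same: usage statistics the catalog is already positioned to collect.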

Conclusion

What started as a simple internal component in the traditional data warehouse world has blossomed into a foundational infrastructure piece in the lakehouse paradigm. The low-level metadata catalog is no longer hidden; it’s a central player that must continuously scale, adapt, and innovate. By serving rich, granular, and queryable technical metadata, this catalog empowers multiple engines to work more efficiently, reliably, and autonomously on shared datasets.

As the ecosystem matures, we can expect these catalogs to become even smarter—providing incremental snapshots, partition-to-datafile mappings, queryable Parquet statistics, and dynamic optimizations. These advancements promise to simplify query engines’ lives further, pushing the boundaries of performance, scalability, and flexibility in the ever-evolving lakehouse future.

The catalog’s journey from afterthought to hero is just beginning. Its evolution will shape how we store, query, and govern data for years to come. As lakehouses and transactional data lakes continue to mature, the role of low-level metadata catalogs in managing our data assets will only grow in importance.

